X without rules: without controls, hatred thrives

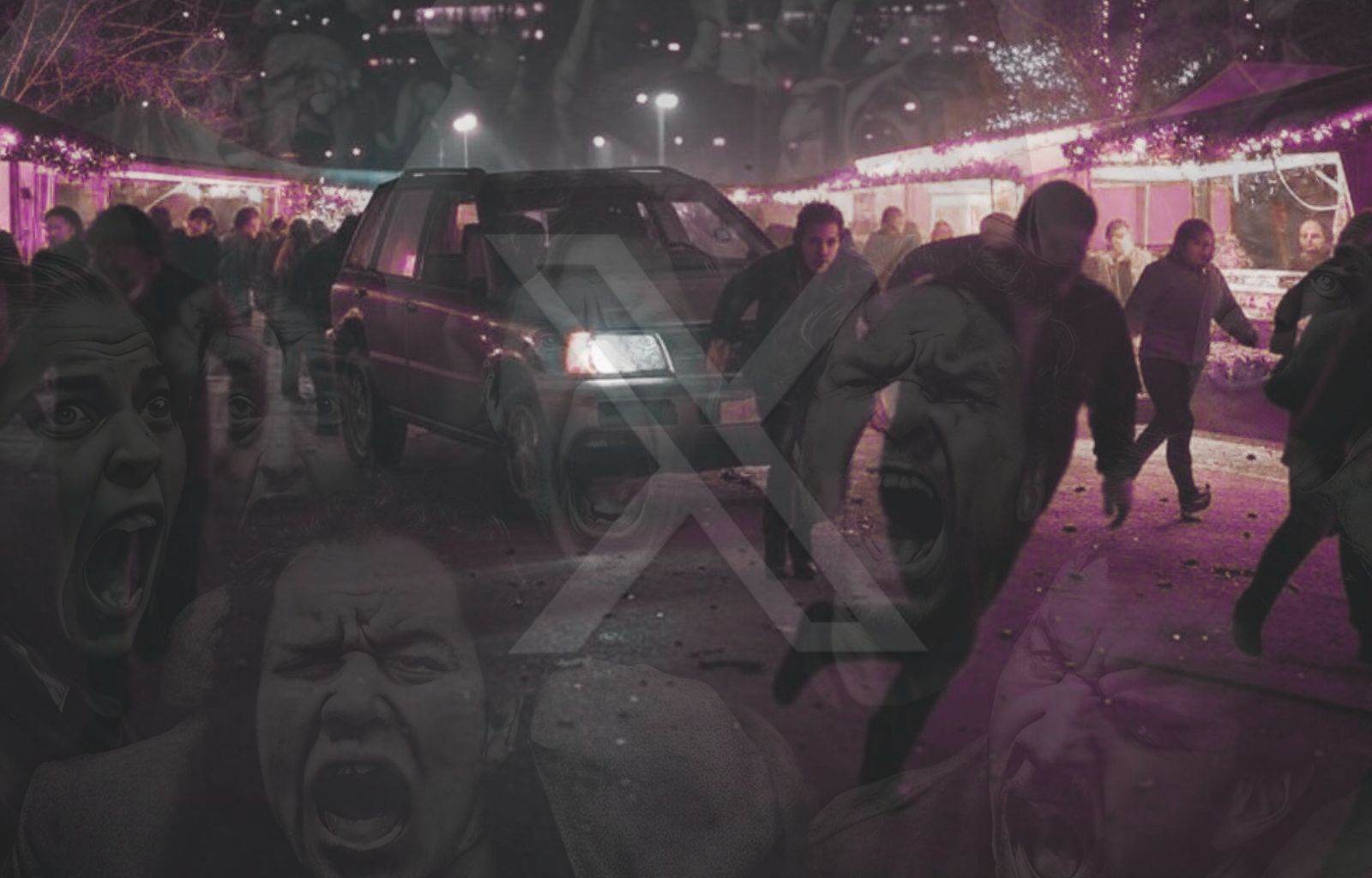

At 7:04 pm on 20 December 2024, Taleb Abdulmohsen, a 50-year-old Saudi psychiatrist, sped a dark BMW around the last corner before Magdeburg’s market square and ploughed at full speed into the crowd, turning the Christmas market into a scene of terror. Abdulmohsen, who had arrived in Germany in 2006, was known for his anti-Islamist activism and conspiracy views. Despite extradition requests from Saudi Arabia, Germany had always refused to hand him over.

Threats ignored

As information about the attacker’s identity emerged, his X profile revealed disturbing details. His posts include death threats against former Chancellor Angela Merkel, statements declaring that he was ready to kill 20 German citizens, and proclamations of war against Germany. In 2023, Abdulmohsen had asked his 48,000 followers on X: “Would you condemn me if I randomly killed 20 Germans for what Germany is doing against the Saudi opposition?” Four months before the attack, he had written in Arabic: “I assure you: if Germany wants war, we will have it. If Germany wants to kill us, we will slaughter them and die.” He had added: “Is there a way to justice without indiscriminately massacring German citizens?” Despite the seriousness of this content, neither the German authorities nor the platform’s moderators seem to have noticed, and these extremist statements went unchecked.

X: the platform without rules

Elon Musk’s controversial management of X has drastically reduced control over content, turning the platform into a breeding ground for online extremism. After buying Twitter in 2022 and later renaming it X, Musk promised virtually unlimited freedom of speech. One of his first decisions was to cut the platform’s moderation staff, sharply weakening control over violent and extremist content. This choice encouraged conspiracy theorists, racists and radicals of all political and religious orientations to return to X, turning the platform into a powerful amplifier of hatred.

An incubator of hatred

In the case of Taleb Abdulmohsen, even a little moderation could have made a difference. Explicitly violent, even homicidal, statements such as those on his profile could have been detected and promptly reported to the relevant authorities. This might not only have prevented a tragedy like this one, but would also have helped avoid creating a fertile environment for extremism and self-radicalisation. Such an environment fuels the phenomenon of ‘lone wolves’: individuals radicalised online who act autonomously, evading traditional security checks and becoming a nightmare for counter-terrorism services worldwide.